Author(s): Juha Jylhä; Minna Väilä; Marja Ruotsalainen; Juho Uotila

DOI: 10.14339/STO-SET-273-03 | ISSN: TBD

ABSTRACT

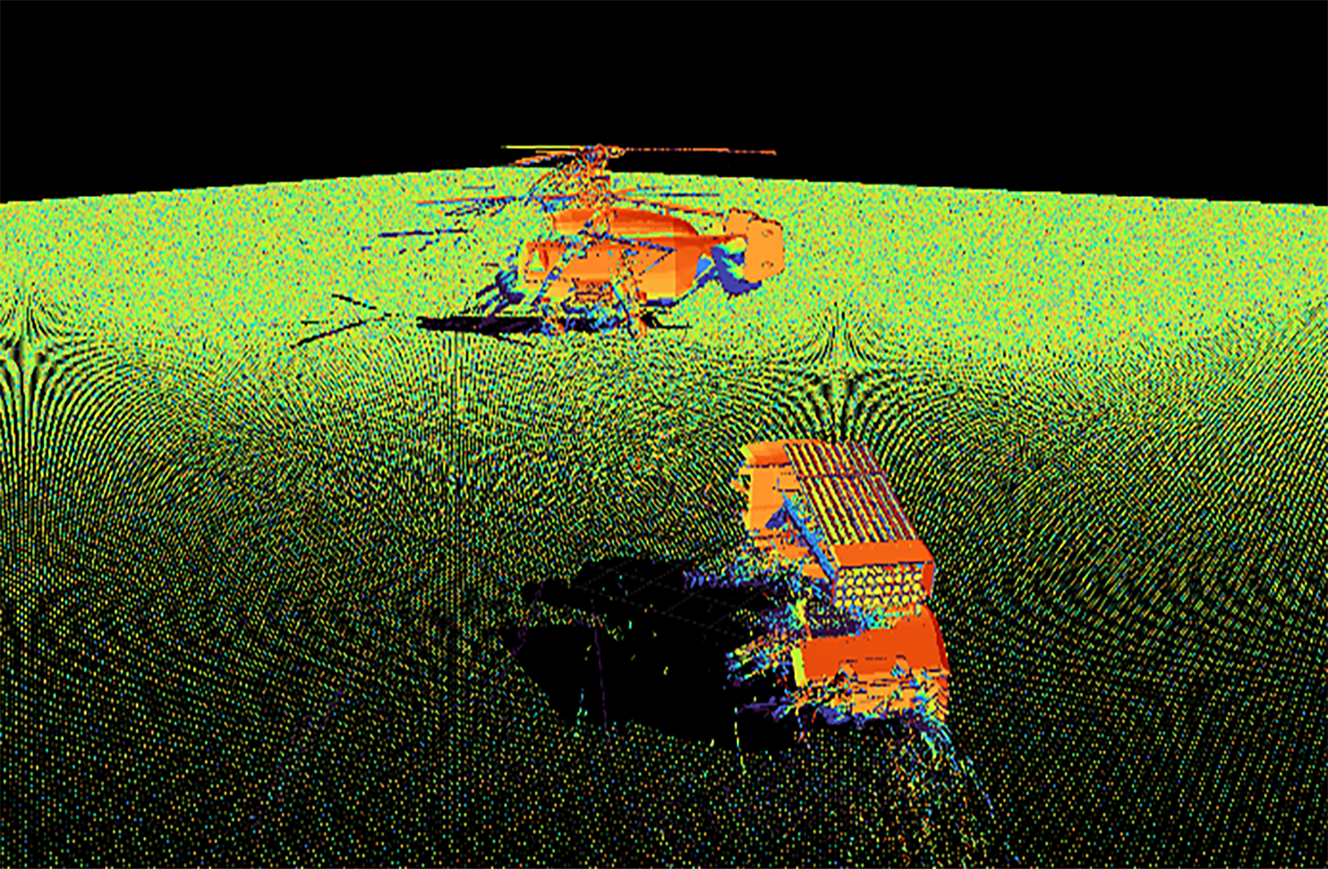

Synthetic Aperture Radar (SAR) imaging from a small, lightweight aircraft, such as a drone, places special requirements on image formation. Such an aircraft cannot maintain strictly straight and level flight because it is easily disturbed by even small atmospheric effects, such as wind. With conventional SAR methods, a highly fluctuating flight trajectory compromises image focusing and distorts the image. In addition, severe inaccuracies emerge when registering the image with other information sources: other SAR images of the same scene or geographic information, such as maps or orthoimages. Integrating multiple sources or multiple viewing angles improves the detection, recognition, and identification of objects in the image. In this paper, we consider combining the registration procedure with the SAR image focusing algorithm. We demonstrate our approach through an experiment using a SAR system consisting of a Commercial Off-The-Shelf (COTS) lightweight drone with a COTS sensor payload: a Frequency-Modulated Continuous-Wave (FMCW) radar operating at K-band and synchronized with a navigation unit. An accurately focused and georegistered stripmap SAR image with a resolution of 10 cm is reconstructed for a trajectory that is challenging for conventional methods. The implementation and its relevance to different levels of data fusion, especially image-level or pixel-level fusion, are discussed.
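
The abstract does not name the focusing algorithm used. As an illustration only, the idea of combining focusing with registration can be sketched with time-domain back-projection, a well-known technique swapped in here because it accepts an arbitrary measured trajectory and accumulates the image directly on a grid given in map coordinates. The function names, the idealized point-target simulator, and all numeric parameters below are hypothetical and are not taken from the paper.

```python
import numpy as np


def simulate_point_target(positions, target, ranges, wavelength, resolution):
    """Ideal range-compressed echoes of one point scatterer (hypothetical test data).

    positions: (n_pulses, 3) antenna positions in map coordinates [m]
    target:    (3,) scatterer position in the same coordinates [m]
    ranges:    (n_bins,) uniformly spaced range-bin centres [m]
    """
    dist = np.linalg.norm(positions - target, axis=1)
    # Sinc-shaped range response centred on the true distance of each pulse.
    envelope = np.sinc((ranges[None, :] - dist[:, None]) / resolution)
    # Two-way carrier phase of the echo.
    phase = np.exp(-1j * 4.0 * np.pi * dist / wavelength)
    return envelope * phase[:, None]


def backproject(profiles, ranges, positions, grid, wavelength):
    """Focus range-compressed pulses onto pixels given in map coordinates.

    grid: (n_pixels, 3) pixel positions [m]. Because the pixels are defined
    in the map frame, the focused image is registered to that frame as far
    as the navigation data are accurate.
    """
    image = np.zeros(len(grid), dtype=complex)
    for profile, pos in zip(profiles, positions):
        # Exact pixel-to-antenna distance: no straight-flight assumption.
        dist = np.linalg.norm(grid - pos, axis=1)
        # Linear interpolation of the complex range profile at those distances.
        sample = (np.interp(dist, ranges, profile.real)
                  + 1j * np.interp(dist, ranges, profile.imag))
        # Undo the two-way carrier phase so echoes add coherently.
        image += sample * np.exp(1j * 4.0 * np.pi * dist / wavelength)
    return image
```

In this sketch the trajectory enters only through `positions`, so a fluctuating flight path changes the per-pulse distances but not the structure of the algorithm. Its cost grows with the number of pulses times the number of pixels, which is the usual trade-off against frequency-domain methods.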
